FROM THE INTERFACE RESIDENCY TO “ALGORITHMIC DRIVE”: A TWO-WAY JOURNEY

[“Driverless Car Afterlife”, digital print (2017).]

The following text is divided into two parts: first, an in-depth interview with the artist François Quévillon, the very first to have taken part in Sporobole’s Interface art-science residency; then a short reflection on the exhibition “Algorithmic Drive”, presented at Sporobole in November 2018.


In 2014, François Quévillon began the very first residency of what would become Sporobole’s Interface program, which supports an art and science project every year. Run in close collaboration with the Université de Sherbrooke, the program brings in many researchers from a range of fields, from sound engineering, neuroscience and microbiology to the processing of materials such as glass, to name just a few. In the case of François Quévillon, sound mapping and machine learning led him to develop a whole corpus of works that were the subject of a BetaLab exhibition at Sporobole last November. Entitled “Algorithmic Drive”, the exhibition featured more than ten works (installation, interactive device, digital print and videos) and offered a vast overview of the artist’s research over the past four years.

At the beginning of 2019, I spoke with François Quévillon, who told me about his residency experience and the process behind the works presented at Sporobole.

– Can you talk a little bit about the beginning of the project: where does the idea of working on machine learning and sound mapping come from? How do these two aspects relate to each other?

First of all, it must be said that the Necotis Group (Computational Neurosciences and Intelligent Signal Processing) was among the first to express an interest in the research-creation project, but I continued to meet people, both because no researcher in the group was available at that time and to see all the possibilities. So I met researchers in different fields: microfluidics, nano characterization, biophotonics, robotics too. It was a process that took several months and was originally intended to include a social sciences and humanities researcher. Machine learning and sound mapping… It really emerged with the Necotis research group, after meeting Louis Commère, a student who was interested in sonifying both biological and artificial neural networks, and another researcher, Sean Wood, a PhD student who was working on the “cocktail party” effect. Let’s say there are a hundred people talking in a room: the goal is to isolate one voice for those who can’t follow a conversation when it’s very loud. So how do you isolate a voice in space and noise… I found that the sonification of neurons had already been approached artistically too often for it to become our common research project: there are artists who have been working on it since the 1960s, who have sonified the activity of the brain, the body in general, etc. So we did the opposite: rather than sonify a neural network, why not generate a structure from sounds? That gave us the idea of sound mapping. Then with Sean Wood, who was working on the cocktail party effect, we thought: rather than isolating a voice, how could we create a machine that doesn’t listen to humans? It was really a question of moving away from a surveillance dynamic, of imposing obstacles on the machine so that it would not succeed in perceiving a human voice. So we tried to do something at the crossroads of our respective practices while being out of step with them. I generally work more on problems related to the image, and here a project focused on sound emerged… That is more or less what shaped the project.

– If I understand correctly, the residency started with research on the question of sound and then, in a second stage, moved towards machine learning…

Yes, the research group is already working on artificial intelligence and developing robotic systems, among other things. They work a lot on sensory substitution as well. Louis Commère, for example, is currently working on the sonification of objects using 3D sensors or cameras: it involves digitizing an object in 3D and translating it into sound. Then there is Etienne Richan, with whom I worked more recently, whose process is a bit the opposite: he generates textures from sounds. In both cases, they are working on ways to help people with visual or hearing disabilities, for example translating sound information into images or the other way around for the visually impaired, thus translating – in a way – space into sound. Facebook, Google and others also conduct artificial intelligence research that highlights the benefits for people with disabilities and their positive social impact, but some aspects of these technologies can also be used for advertising targeting or for less noble purposes. Of course, we all want to help people who have difficulty hearing or seeing, but this research can be used for something else.

– Yes, it can be used for good or for evil…

Yes, that’s it…

There is a critical and poetic dimension, but also conceptual, technical and aesthetic dimensions to this project, which we were trying to balance. The [Necotis] group has great artistic sensitivity. I introduced them to some artists, but there were some they already knew, especially in relation to artificial intelligence, which in recent years has become a popular subject in the art world. It was really the beginning of a certain wave in 2015, with Google’s Deep Dream, among others, and then many psychedelic “morphing” projects and artificial neural networks that were beginning to emerge. Among other things, I told them about David Rokeby’s work “The Giver of Names”, which dates back to the early 1990s. An object was placed on a base, with a camera watching it, and then the system generated poetic content from what it perceived. It is a project that was already very advanced for the time.

Another element that initially guided our project was the place where we were going to work, at Sporobole. You know the space: there are plenty of surveillance cameras around the art center. It is a highly monitored space and it is not known who owns some of the cameras and whether they really work. As you know, just above Sporobole’s main entrance, there’s a huge surveillance camera: I think it belongs to the city, maybe the police department. There are others in the alleyway where the restaurants and bars are, and others still near the condos. There are at least 20 surveillance cameras around Sporobole. So there were a few questions about that too, the issues surrounding surveillance, and how to make a device that is not a control and surveillance system: it also guided our research.

– And how did you use the cameras around Sporobole?

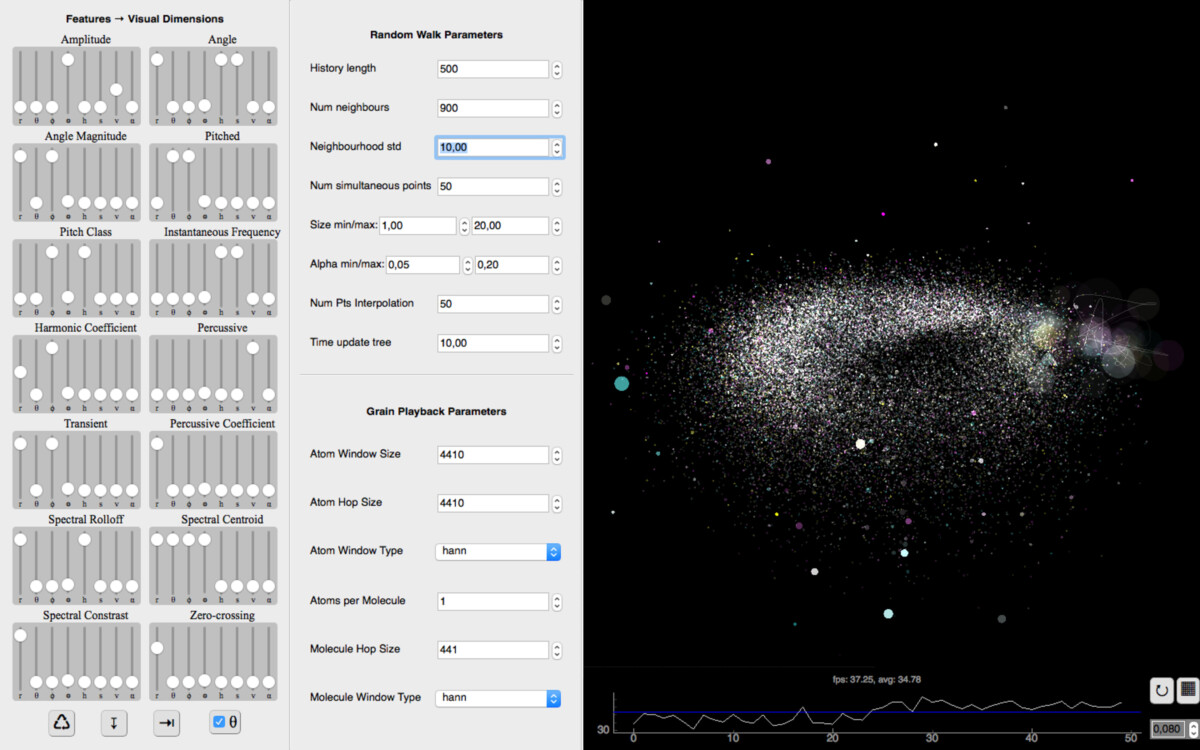

We did not use the cameras, but we thought, for example, that we would make a sound map of the place that moves away from the idea of surveillance, that is, one that does not capture conversations, does not monitor cars, but fragments and scrambles the course of events. Then we decided to pick up noise from the roof of the building, but with very short, discontinuous recordings. We finally put so many obstacles in the way of the system that in the end it’s just noise, sound chaos. Its way of reconstructing a composition is also very uncontrolled. The system works with a random walk algorithm, a stochastic process. Let’s say you get to a street corner: you can go left, right or straight ahead. It is a bit the same principle: there is a random choice of direction within a network of defined paths. It was constrained by sound particles classified in different ways: low frequencies, high frequencies, high volume, low volume, percussive aspect of the sound, etc. That is what constituted a space… The system interface includes twelve parameters: amplitude, angle, height, pitch class, frequencies, harmonics, percussive aspect, transient aspect, spectrum, spectral centroid, spectral contrast and zero crossings. All these parameters can be mapped. Similar sounds tend to group together, but we can also tell the system to work by contrasts instead, then determine a rhythmic structure from that…
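[Editor’s sketch: the principle described above, a random choice of direction within a network of defined paths linking similar sounds, can be illustrated in a few lines of Python. This is not the program developed during the residency. The three features per fragment, the nearest-neighbour linking rule and the names `build_paths` and `random_walk` are all assumptions made for the illustration.]

```python
import math
import random

def build_paths(points, k=3):
    """Link each sound fragment to its k nearest neighbours in feature space."""
    paths = {}
    for i, p in enumerate(points):
        others = sorted((j for j in range(len(points)) if j != i),
                        key=lambda j: math.dist(p, points[j]))
        paths[i] = others[:k]
    return paths

def random_walk(paths, start, steps, rng):
    """At each fragment, pick the next one at random among its linked neighbours."""
    sequence = [start]
    for _ in range(steps):
        sequence.append(rng.choice(paths[sequence[-1]]))
    return sequence

rng = random.Random(0)
# Hypothetical fragments: (amplitude, spectral centroid, percussive aspect), each in [0, 1].
fragments = [(rng.random(), rng.random(), rng.random()) for _ in range(20)]
paths = build_paths(fragments, k=3)
# The order in which fragments would be played back.
composition = random_walk(paths, start=0, steps=16, rng=rng)
```

[Swapping which features are used as coordinates changes the neighbours of each fragment, and therefore the paths the walk can follow.]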

– And it’s a program that does that?

Yes, it’s a program we developed together. So after doing the sound mapping of Sporobole, I tried different things that seemed to me to have both a poetic side and a scientific validity. For example, to generate a cloud from storm noises. I extracted four days of storm recordings on the Internet and rather than using very short samples of one tenth of a second I used complete seconds that are mapped according to different characteristics: the thunder sounds are in the same place, then we have rain, different rain frequencies, the higher we go up the higher the frequencies, then the lower the frequencies… Then there is a line that generates an endless composition. You’ve seen the cloud, it’s always a fixed configuration, you turn around but the cloud doesn’t move… Here, for “Murmuration”, where you hear the birds tweeting and chirping, I changed the parameters: the amplitude was replaced by the percussive aspect, then the percussive aspect became the spectrum, so it changes the position of the points, then by reconfiguring the points it changes the possible connections between the points. So the composition that is generated by bird sounds depends on the layout of the information in this space.

– And this system works randomly?

It’s controlled, or rather guided, randomness, and that’s what I like: I know roughly what can happen, but it’s always surprising. It’s not completely random: everything is very structured but evolves in an unpredictable way.

– It evokes a lot of the sound of a radio, when you’re looking for a station…

Exactly! Yes, you’re not the first person to tell me that. It is really a different type of spatialization: the sound environment of Sporobole is translated into a non-Euclidean geometry. It is like mapping in an elliptical spherical space, with a center and different axes, which also makes it possible to create different types of compositions.

– So these are three sound mapping exercises that resulted from the residency…?

Yes… The one I just mentioned was the first and most chaotic, the noisiest: we don’t understand anything about what’s going on because we really wanted to get as far away as possible from a surveillance dynamic.

[Graphical interface of the prototype developed during the residency.]

– Is this a failure of the surveillance dynamic?

In a way, the system tries to make sense from noisy micro-fragments.

To get back to the residency… At first, various factors slowed down its start. And I wanted to get out of my comfort zone. I could probably have continued what I was doing with “Dérive” and worked in 3D scanning with more sophisticated instruments. Another avenue was the clean room where there are, among other things, atomic force microscopes. I could have worked there to create images with microscopes, but that is something of a cliché when you think of art-science relationships. I wanted to go into territory that I had not yet explored. Maybe work more on sound, then work with context, place, Sporobole, noise, monitoring, different issues related to data collection and algorithmic procedures. Which reminds me: recently I read that someone asked Amazon for the recordings from his Alexa device, and they sent him another person’s recordings, 1700 recordings from another house! There are more and more systems like this, let’s think about cars… That’s what led me to the project that followed. Cars are networks of mobile sensors, increasingly connected and equipped with “intelligent” systems, and it is not clear where some information is going. So yes, it’s another issue that interested us with the research group, but there were a lot of different layers, let’s say. At first I had no idea where I was going with this project and it was through encounters that it developed.

– And with the residencies that finally followed… Because there was the Interface residency and what came out of it, but you took all that elsewhere afterwards…

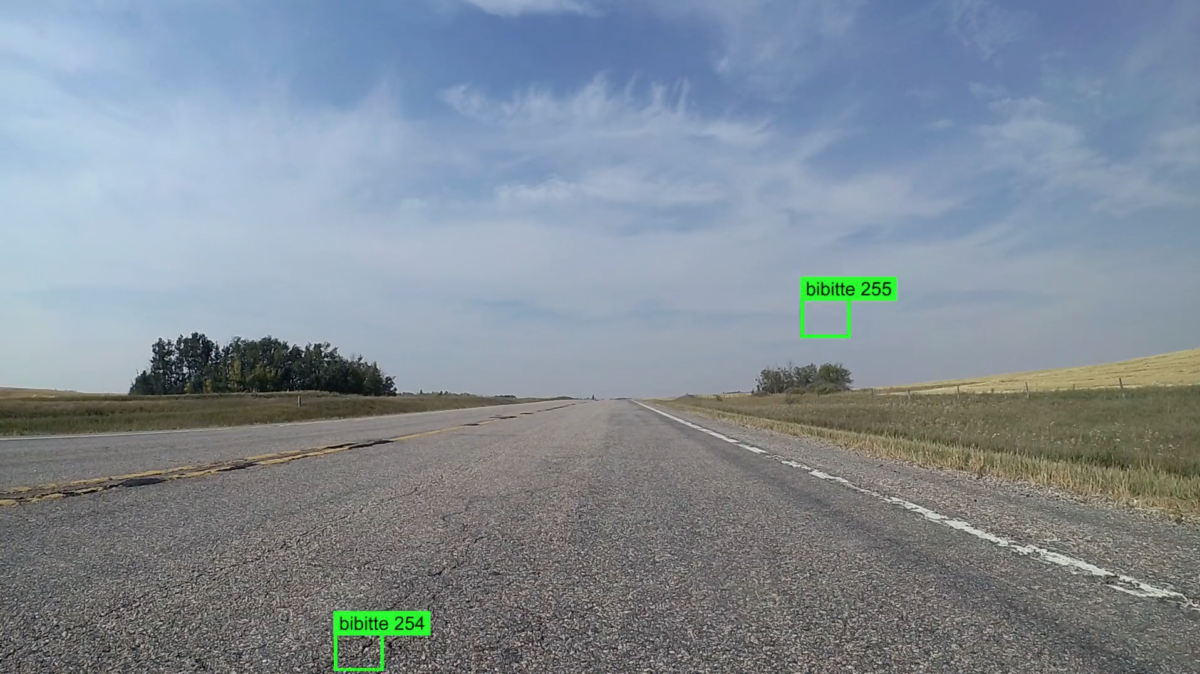

Yes, that’s right, we’re coming to the next project… Especially at La Chambre Blanche, and I was also involved at UQAM in Jean Dubois and Alexandre Castonguay’s research group on operative images, an aspect that has interested me since the beginning of my practice. You wrote a little about this in 2012 for my residency in Brazil at LABMIS: operative representations were discussed and I talked about Samuel Bianchini’s work and images that act by themselves and on which we can also act. I came back to that: it was therefore a question of continuing what we had developed here during the residency in audio analysis, but going further by analyzing the images and data of the vehicle. For the past two years, I’ve been shooting with a camera that I install on my front bumper. I recorded sound and images, and as the camera is connected to the car’s on-board computer, other information was added: my speed, geographical coordinates, camera vibrations, data from the engine too, coolant temperature, etc. Really a data overload. Then, in a second stage, with a similar structure, a random walk algorithm and some controllable parameters, procedures determine the course of events. There is also a link with “Waiting for Bárðarbunga”, where data coming from the computer’s sensors generates the film editing in real time. It is a form of visualization of the machine’s activities, so the idea was to take what we had achieved a little further and create an interface that allows us to interact with the system.

[Detroit video and data capture for Algorithmic Drive.]

– And in what context did this project emerge?

It emerged slowly. I started working on this in 2016: I had different ideas, and I wanted to work again with the research group, but Sean Wood was going out of the country for a very long time and Louis Commère was very busy, so either I was developing it by myself or I was working with a new person. Then I met a new member of Necotis in 2017, Etienne Richan, who is also interested in what is happening in the artistic world. It’s really great to work with him on this: we’ve been working together for about a year.

– So you met him after your residency: in off-residence mode?

Yes, that’s right – or post-residency – more than a year after my residency at Sporobole there were multiple periods of research: ideas, technologies, collaborators, funding… Autonomous vehicles, in 2015-2016, were really what was starting to raise many issues related to artificial intelligence, but in a really concrete way. In fact, among the links I sent you is the Moral Machine – an online platform that explores the moral dilemmas related to autonomous vehicles. It addresses various problems related to artificial intelligence systems, with all kinds of ethical and moral, but also cultural issues: in the event of an impending accident, is the life of a child or an elderly person protected as a priority? If you are in North America, the child is considered more “important” than the elderly person, but in some places in Asia, it is the elderly person. The same question arises about gender, social status and health status… So not only is there not really a good answer, but it varies from one part of the world to another. And would we want a car that protects our lives, or the lives of people on the outside? And would it be possible to program this yourself? Is it acceptable to be able to adjust the level of protection that is afforded to each other or to others…?

– How to manage the issues related to the notion of “danger” that are related to driving…

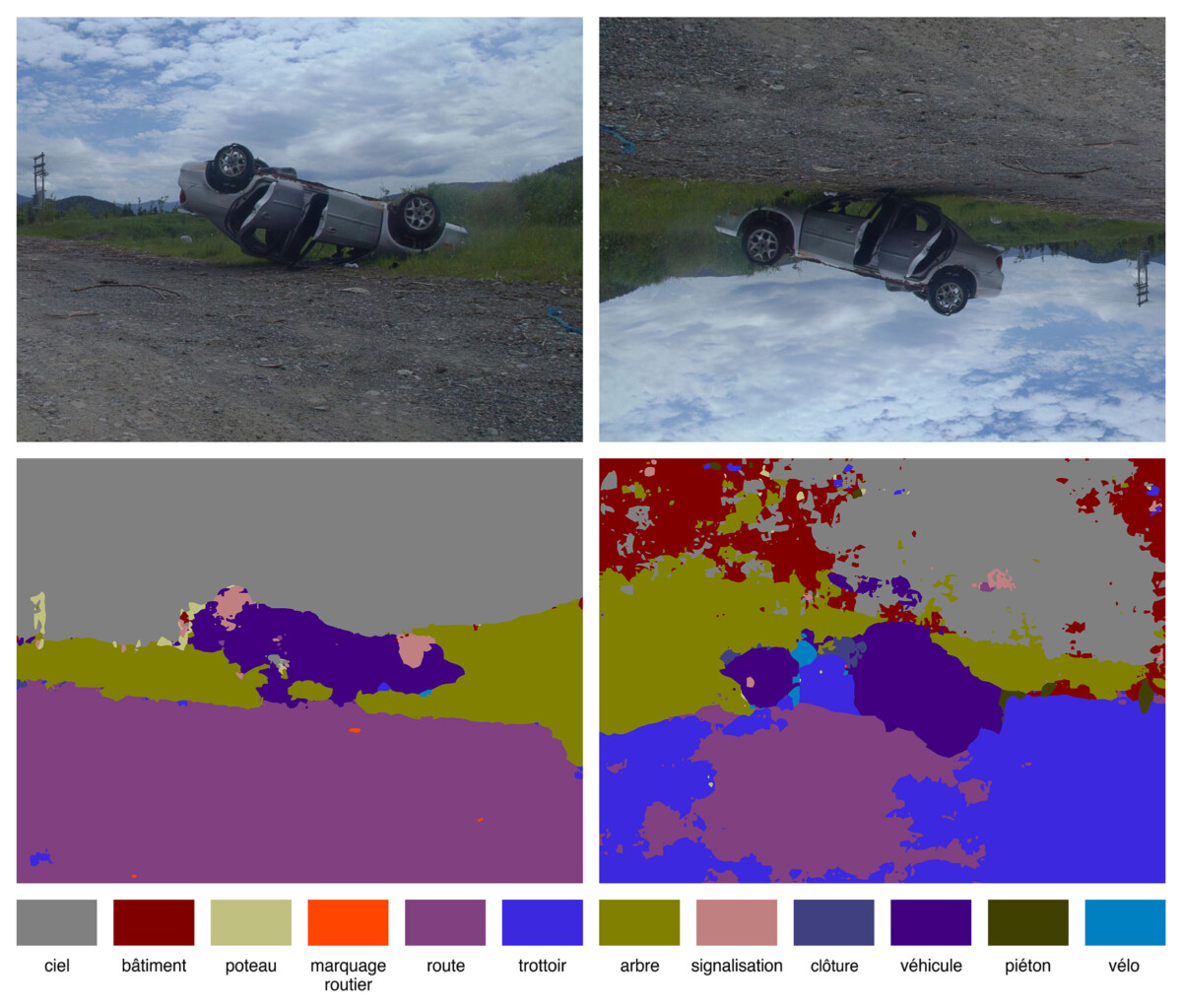

Yes, and these systems are often developed in California or the United Kingdom. For example, the system I use here is SegNet, and it is trained with images that differ from the winter conditions in Quebec. If I use it in a snowy environment, what happens? There’s white everywhere… Or at night, what does it perceive? Which brings me to the dash cam compilations – are you familiar with that? – it’s very popular on YouTube, there are thousands of them. All kinds of unlikely, unpredictable accidents, particular contexts… Like in Fort McMurray, for example, in a forest fire, what happens: you have a computer vision system or a LiDAR, with smoke and projectiles coming from everywhere… If there is a flood, for example, what does it see? Or a tornado, a hurricane… A few years ago these videos came mainly from Russia and Asia, where drivers installed cameras on their dashboards because people would cause accidents and then sue the driver. The camera was an eyewitness in case an accident happened, a way to protect yourself. Another example: a meteor fell in the middle of a village and the fact that these cameras are constantly recording made it possible to capture the meteor from several different angles. This is another type of operative image that takes on legal value and where we can see the unpredictable happen: situations in which this type of system would sometimes become ineffective.

– Yes, well, already, it can be destroyed… Then if it is not, it will be a capture of chaos, confusion…

Yes… And also, what could be interesting would be to train the system from these compilations of dash cam recordings. So from a very catastrophic vision of the world, then see what happens… This idea has led to the emergence, not only of the interactive installation “Algorithmic Drive”, but also several elements of the series “Manoeuvres”.

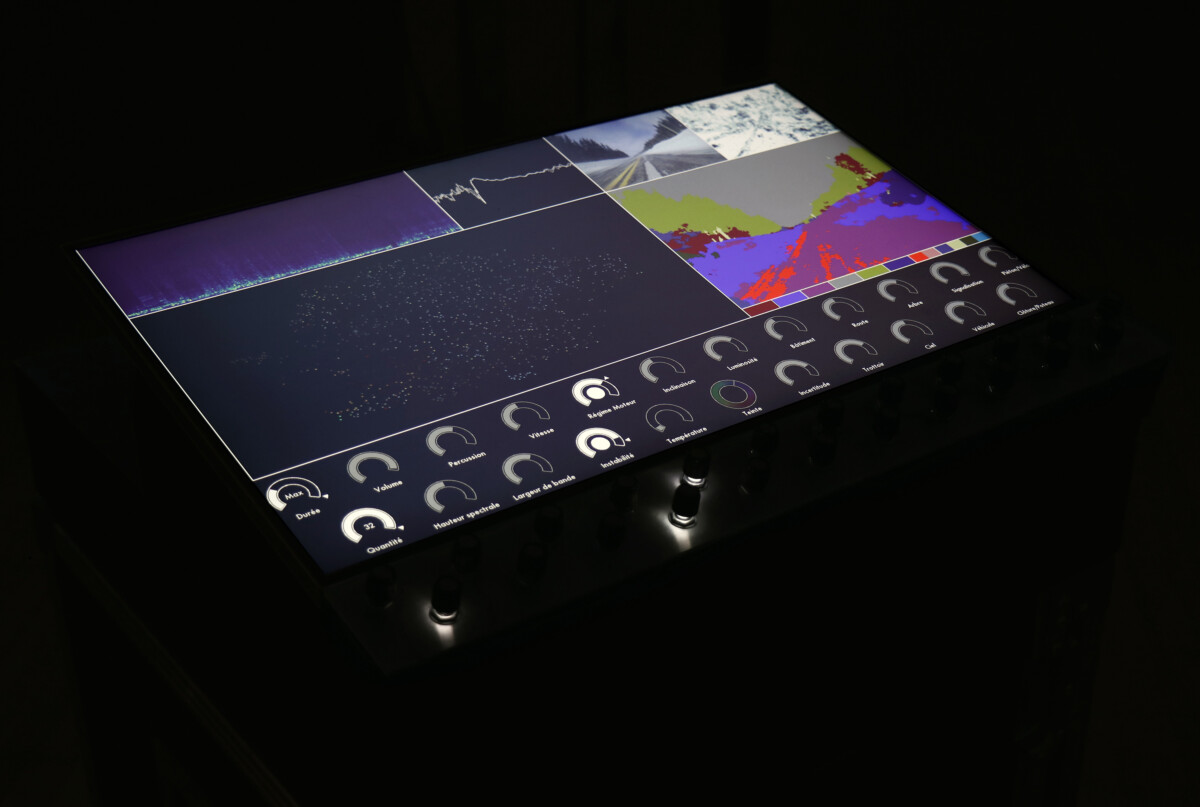

[“Algorithmic Drive”, interactive installation (2018). Daïmôn.]

[“Algorithmic Drive”, interactive installation (2018). Expression]

[“Manœuvres“, video series, 2017-2018.]

[Working document juxtaposing videos of autonomous vehicles and dash cams (YouTube).]

– So here we’re talking about a video compilation? Just to be sure…

Yes, it is a working document. In fact, on the left we see different computer vision systems that are embedded in autonomous vehicles, then on the right they are compilations of dash cams. I simply show two opposite types of perception of reality if you will, two types of operative image systems. This gives the impression, on the one hand, that the world can be controlled, that autonomous vehicles will drive better than humans, but at the same time they will find themselves in all kinds of situations where it will not work.

– It will be defeated….

Yes, like the woman who was hit by an autonomous Uber vehicle in Arizona and died. In fact, it was a case of “false positive”: from what I understood, the system first perceived her as a human being, but afterward, it thought to itself: it may just be a bag… !

– “Maybe just a bag”?! Wow!!!

Yes, well, if it’s a rock or a bag, what seems pretty easy to identify to us is the result of years of experience in the world, but how does a camera or a LiDAR evaluate the resistance of an object that is unusual to it, or if it’s alive or not, it’s something else…

– It’s still disturbing, because you said the word “maybe” so it implies that there was doubt… Which means that the machine is able to doubt and if there is a form of possible doubt, how come it’s “allowed” to absorb that doubt? It’s very disturbing…

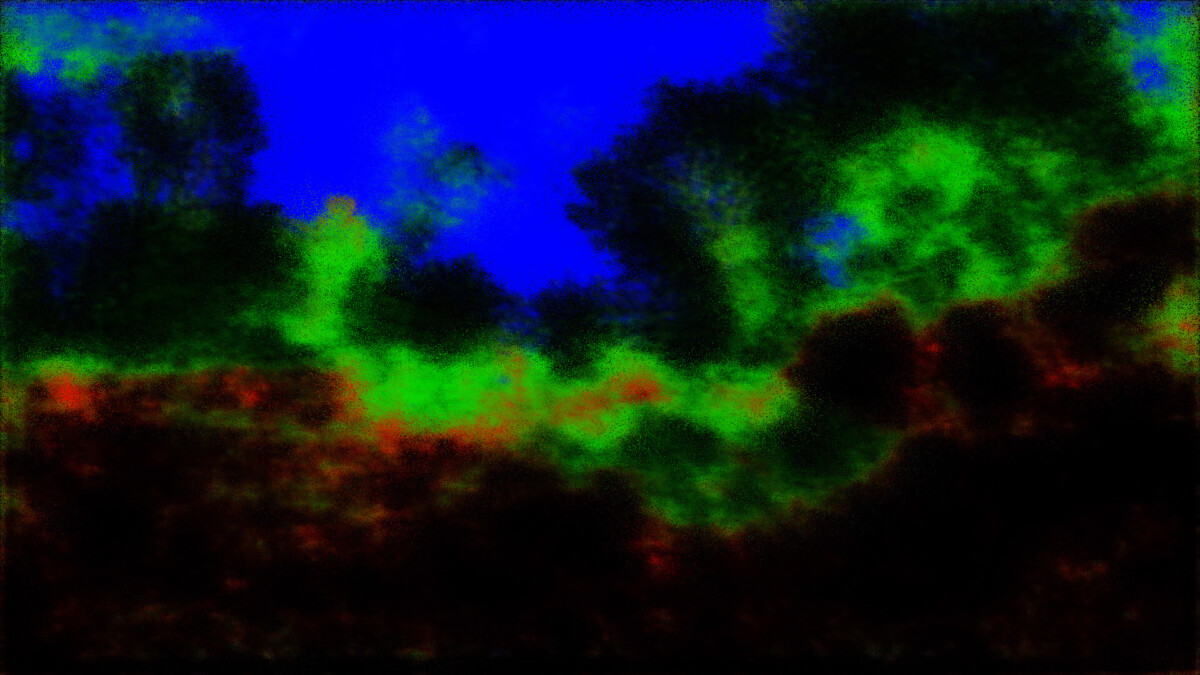

Yes… At the top right on the “Algorithmic Drive” monitor, there is also the uncertainty model that is generated. There is a semantic classification: sky, road, trees, buildings, etc. In particular, I worked with this type of model for the Expression exhibition: I drove around St-Hyacinthe and what we see in black is what the system has difficulty interpreting, so it is the machine’s uncertainty model… The electrical wires, for example: when there are lines in the sky, it has difficulty deciding: “Is it a building? Is it the sky?” This drives the uncertainty up.
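[Editor’s sketch: one common way to put a number on this kind of hesitation is the entropy of the probabilities a classifier assigns to each category for a given pixel. Whether the system used in the works computes its uncertainty this way is not stated in the interview, so the measure, the three categories and the probability values below are assumptions chosen only to illustrate the idea.]

```python
import math

def entropy(probs):
    """Shannon entropy of a probability distribution (0 when one class is certain)."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def uncertainty(probs):
    """Entropy rescaled to the range 0..1, where 1 means every class is equally likely."""
    return entropy(probs) / math.log(len(probs))

# Hypothetical class probabilities for two pixels, in the order (sky, building, road).
clear_sky = [0.96, 0.02, 0.02]         # the system is almost sure it sees sky
wire_against_sky = [0.48, 0.47, 0.05]  # "Is it a building? Is it the sky?"

print(round(uncertainty(clear_sky), 2), round(uncertainty(wire_against_sky), 2))
```

[Under this measure, the pixel where two categories are almost tied scores far higher than the pixel with a single dominant category, which is the kind of area that would appear in black on the map.]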

[“Mapping Machine Uncertainty”, installation (2019) and “The Iterative Roundabout”, from the “Manoeuvres” series (2017-2018). Expression.]

[“Uncertainty Levels”, video (2018). Sporobole.]

– It is not sure if the line delimits a volume or if it is empty…

That’s right, and it’s a measure that is intended to be objective, but it’s very poetic at the same time. Also, if you go to the same place three or four times, for different reasons, the value changes: does someone cross the street? Has it snowed? Or, what is the intensity of the ambient light? It is never the same value and, in this sense, it is also more or less valid as a map: it represents an interesting paradox… I am developing different projects around these “phenomena” but I am not sure of their status. When I talk about this device [“Algorithmic Drive”], I get the impression that it is neither an interactive installation nor a performative device. In any case, I see it a lot as a research instrument. Kind of like what I did during my residency: it was a system [a computer program] that led to different experiments and acted as a driving force to think about these questions.

– And how does the device work?

Yes, we can try it….

– Is it meant to be participatory?

Yes, well, either you let it work autonomously or you try to control it. It is as much a control interface as a data visualization system. There are twenty-one parameters and two buttons for controlling the duration and connections between videos. Among the parameters are sound amplitude, vehicle speed, engine speed, image parameters, temperature, etc. So you have twenty-one dimensions that are reduced to two dimensions: it takes the twenty-one parameters and then it generates a map, a two-dimensional image. It is also a self-monitoring system because it records the vehicle’s activity, including its speed…
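[Editor’s sketch: reducing twenty-one parameters to a two-dimensional map can be done in many ways, and the interview does not say which method the installation uses. The example below uses principal component analysis, computed by power iteration, purely as a stand-in. The clip data is randomly generated and the function names are hypothetical.]

```python
import random

def center(rows):
    """Subtract the mean of each parameter so the data is centred on zero."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    return [[r[j] - means[j] for j in range(d)] for r in rows]

def covariance(rows):
    """Covariance matrix of already-centred rows."""
    n, d = len(rows), len(rows[0])
    return [[sum(r[a] * r[b] for r in rows) / (n - 1) for b in range(d)]
            for a in range(d)]

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(x * y for x, y in zip(row, v)) for row in m]

def dominant_axis(cov, rng, iterations=200):
    """Power iteration: approximates the direction of greatest variance."""
    v = [rng.uniform(-1, 1) for _ in cov]
    for _ in range(iterations):
        w = matvec(cov, v)
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    return v

def project_to_2d(rows, rng):
    """Project each row onto the two strongest axes of variation."""
    x = center(rows)
    cov = covariance(x)
    axes = []
    for _ in range(2):
        v = dominant_axis(cov, rng)
        strength = sum(a * b for a, b in zip(v, matvec(cov, v)))
        axes.append(v)
        # Remove this axis from the matrix so the next pass finds a different one.
        cov = [[cov[a][b] - strength * v[a] * v[b] for b in range(len(v))]
               for a in range(len(v))]
    return [[sum(a * b for a, b in zip(r, axis)) for axis in axes] for r in x]

rng = random.Random(1)
# Hypothetical data: 40 video clips, each described by 21 measured parameters.
clips = [[rng.random() for _ in range(21)] for _ in range(40)]
map_points = project_to_2d(clips, rng)  # one (x, y) position per clip
```

[Clips with similar measurements land close together on such a map, so moving across it amounts to moving between related sequences.]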

About the title of the work: in French it is “Conduite algorithmique” and in English, “Algorithmic Drive”, which have two different meanings. In French it relates to the notion of behaviour, but in English it evokes a driving force or a compulsion – the drive that delegates tasks to algorithms.

[“Algorithmic Drive”, interactive installation (2018). Expression.]

– And that’s also the title of the exhibition…?

Yes, because, as I said, it is a research instrument that has generated many other things, that has fed reflections and works in parallel.

– Works that were presented in the exhibition at Sporobole…?

Yes… Precisely there was also the rearview camera that I recontextualized. Now all new vehicles sold in Canada have a backup camera, I don’t know if you were aware of that? I learned a few months ago that it has become law. They come with a camera and it’s yet another type of operative image. This is a good example: we don’t care about the aesthetic value of what it captures, the camera is there to help drive, to park. I had recontextualized it and installed it just below the projector, so it monitored the device, it monitored the operator if you will, and the small monitor offered another point of view on what was presented in Sporobole’s window. The ultrasonic sensors usually installed in the bumpers to measure the distance from the nearest object were positioned here on the microphone stand, on which the rear view mirror was installed. The distance of people approaching it was displayed on the screen and an audio signal made it audible. The device has its own integrated security system.

[“Rétroviseur”, installation (2018). Sporobole.]

[“The Iterative Roundabout”, from the “Manoeuvres” series (2017-2018).]

– Okay yes….

If we think about the visual cortex, it’s a bit the same idea: the first things that the artificial perception system I used “sees” before making a semantic classification are RGB channels that separate different areas: the sky, buildings (and different things) and the road. Then it gradually adds different categories. Here at the MNBAQ, I drove in circles, hence the title [“The Iterative Roundabout”]. It’s a sixteen-second loop and you can see the roundabout in different states: buildings in red, vegetation in green, but the system always hesitates between the road and the sidewalk because the road is snow-covered, so is it the road? Is it the sidewalk? Then it stays in an indefinite state, where it goes round in circles to infinity because it is uncertain about what it perceives… A basic problem of an artificial vision system in Quebec in winter (laughs).

What is also interesting is that for the past two years I have had exhibitions and residencies in different places. A residency in Newfoundland, for example, where I worked on it, often indirectly by going to destinations or sites on excursions. Then I had my exhibition at Paved Art [in Saskatoon], for which I crossed part of Canada and the United States. I have accumulated a lot of content in all kinds of conditions…

– I also perceive an aesthetic, or visual strategies, that remind me of video games…

Yes, well, one interesting thing is that in addition to training autonomous vehicles on the road, they are also trained with hyper-realistic video games. There are scientific simulators out there, and the images and scenarios I saw were rather basic, but if you install the system in Grand Theft Auto there are all kinds of things going on: the world is really complex, you can change the weather in a pretty realistic way… The games become more interesting environments than scientific simulators, or than reality, because there is less danger. It’s another area I’d like to address, the link with video games…

Another issue that I find interesting about this project is the environmental impact of cars. We could talk at length about it, from the transformation and organization of the territory they have generated, to that resulting from their components, operation and fuel production. Many of my works deal with climate change and environmental issues, but my activities have a significant ecological footprint.

– But these are observations that you make, that you interpret, it is not an activist approach…

No, indeed, but of course that is part of my concerns and I wanted to reveal this dimension of my work through this project. Artistic practices often have a significant ecological impact: air or road transport, temporary constructions, materials, equipment and their energy consumption, for example. I was thinking of integrating measurements of my pollution into the system, but I was unable to extract fuel consumption or emission data from the on-board computer. Is it related to the scandals of companies that distorted their results or a technical issue, I don’t know… There are other methods to calculate them but I found it curious.

– All this makes you think… Thank you François!

///////////////////////////////////////////////////////////////////////////////////////////

François Quévillon has been exploring the topics of autonomous vehicles and machine learning for several years now. A first presentation of part of this corpus of works took place at DAÏMON in October 2018, then “Algorithmic Drive” – of greater scope and integrating in situ creations, as well as the works from the Interface residency – was presented at Sporobole from 5 to 24 November 2018. “Manoeuvring the Uncontrollable” (curator: Éric Mattson), currently presented at Expression in St-Hyacinthe until April 21, is another iteration: more elaborate in terms of “scenography” and more complete too. With “Dérive”, “Waiting for Bárðarbunga” and “Variations for Strings and Winds”, it is almost a mid-career retrospective.

Among Quévillon’s recent works, many present themselves as viewing devices: machines, screens and projections lend us their eyes – and this borrowed gaze is our main connection with the machine. We remain on the threshold of these recorded images – filmed, photographed – then carefully analyzed by a system responsible for monitoring reality and its short-term threats. Most often, however, nothing happens. For example, we could watch hours and hours of dash cam footage without ever seeing anything other than the banality of reality and its micro events: a fly flies away, a bag is moved by the wind, a rain shower begins. Behind the eyes of an on-board camera, however, there is a privileged point of view. Whether there is an event or not, it’s like being in the front row of the show. And when there is a show, it practically means that there is a disaster. The re-enchantment of everyday life rarely takes place on Highway 20. With the breakdown, collision, pile-up, rollover or even the explosion – the punctum of the spectacular – on the other hand, there is something to see: “exit” the usual order of things, and “off the road” at the same time.

It is this gaze – full of blind intentions – that Quévillon shows us with the “Manoeuvres” (2017-2018), a series of six short videos that each superimpose an analysis filter for embedded cameras on a sequence of reality. Among these filters, one shows the system struggling with weather conditions that render it inoperative in less than a minute (“Peripheral Vision”); another analyzes its entire environment in night vision on trails mapped for recreational vehicles or hikers, so that the images analyzed are those of a car that has strayed onto an unsuitable road (“Lost in the Forest”); another visually translates the vehicle’s velocity (“The Crossing”); the next one engages in a vain digital capture of insects (“Bug Tracker”); then another shows us the direction of elements moving in front of it (“Flow”); finally, one tries to identify the objects that surround it with questionable accuracy, then the filter gradually disappears to let us see a roundabout (“The Iterative Roundabout”). This “package” of visions, each with its own particularity of image analysis, shows us the extent of the “meta-analyses” that autonomous vehicles can generate to date. And this is only the beginning. Moreover, these involuntary visions carry a certain amount of poetry within them. But the machines don’t know that – and you shouldn’t tell them, they might think they’re superior and want to audition for Asimov’s “Robot” series – isn’t that a Netflix project? I don’t see what they are waiting for…

[“Bug Tracker”, from the Manoeuvres series (2017-2018).]

“Algorithmic Drive” is the title of the exhibition that was presented at Sporobole, and it is also the title of the matrix work from which the other proposals in the corpus are derived. We have already talked about it a little in the interview: it is an interactive device that presents itself as a system for controlling image sequences, which can be modulated according to the twenty-one parameters available on the console, in addition to the buttons controlling the duration and the number of videos selected. In practical terms, the manipulation of the device makes it possible to visualize a whole typology of sequences and to “process” or “mix” them based on image, sound and data analysis parameters extracted from the vehicle: duration, quantity, volume, spectral height, impact, bandwidth, speed, instability, engine speed, temperature, inclination, hue, brightness, uncertainty, building, sidewalk, road, sky, tree, vehicle, signalling, fence, pole, pedestrian and bicycle. “Algorithmic Drive” (2018) – the installation – makes it possible to exert a posteriori control over images which, a priori, can only be passively monitored. It is a selective collection: a collection of catalogued sequences. We could also see it as an archiving system, a classification of what escapes us, what happens and what we don’t see most of the time. It also means that there is a multitude of embedded cameras in a position to see the unexpected. And there are more and more of them: installed on cars as part of the basic equipment, even mandatory. However, these cameras are connected, and the most sought-after data at the moment are geolocation data. At the same time, motorists have difficulty doing without GPS, which has become a real personal assistant during our travels. None of this is merely a happy coincidence, or an unfortunate one, depending on your point of view. Our capitalist hunger for data is endless. “Our” is us, but without us. It is capital that works without our knowledge, and that has long been on autopilot. Disempowered, the machine runs, moves forward and devours, records and archives, hoarding.

But to conclude that this is absolute evil would be to cut corners, and a little too quickly. This evil is not absolute; it is certainly all-encompassing, but it sometimes shares its mischief with other imperatives, such as compiling road mortality statistics from recorded data. Neither good nor bad in the end: being monetizable is in itself the absolute imperative that binds us to these technologies. Whatever has exchange value must be constantly invented: creating needs in order to sell is nothing new. What is perhaps a little newer is that, with data, we see on the one hand that information is unlimited, that everything can be transformed into information, and on the other hand that information itself is constantly transformed, every millisecond. This is certainly a motivating factor behind the growing presence of on-board cameras and devices in Internet-connected vehicles – for better or for worse.

Not all of the technological devices currently integrated into cars are connected. The rearview camera is one of those that are not. The installation “Rétroviseur” (2018) stages a meeting between two elements: the mirror that becomes a screen – isn’t that always a little bit the case? – and in which you see what is captured, that is, yourself, from another point of view. The high-angle perspective recalls an omniscient posture, also evoking a certain authority – that of technologies over our lives? In the gallery space, the rearview camera, integrated into the rearview mirror, is mounted on a tripod placed at the corner of a projection in which a road scrolls past. The result is a mise en abyme staged before our eyes: the image, showing the marks that are supposed to measure and guide driving, also shows that the road to be taken is itself an image of a road and, in a way, a “behind-the-scenes” that the rearview mirror reveals.

One can also wonder what Crash would be like in the era of data harvesting, dash cams and autonomous vehicles. Would greater predictability force Ballard/Cronenberg to create even more reckless characters? Or, conversely, would these tools allow us to plan the magnitude of our accidents with alarming accuracy – for the Vaughans and Dr. Helen Remingtons of this world? The danger of our motorized journeys is now easily measured: uncertainty models and semantic segmentation of data are means of measurement and analysis that reveal a meta-vision of reality, one that projects us, in a way, into the immediate future. A future, which is also a present, where things may not be as we expected them to be: where crossing a multi-storey car park is both perfectly passable and yet totally impassable, as shown in the video “Uncertainty Levels” (2018); and where the other side of reality is just as valid, if not more so, than reality itself, as suggested by “Driverless Car Afterlife” (2017). The on-board camera records and reveals a time without time, where reality is given as multiple. The images and image analyses that constitute this new regime of the gaze condition us more and more to see and perceive differently: not only can we easily project onto them something other than what they are, but they contribute – as J. G. Ballard says in the short documentary essay Crash! (1971), which preceded the publication of the book in 1973 – to creating new emotions and feelings. By transforming the way we perceive reality, technologies make us see the world through their lens – their world becomes ours.

– Nathalie Bachand

[“Crash” (1996), David Cronenberg (Google Image).]

/////

Photographic credits: François Quévillon.